Getting Started with GPT-4o: A Beginner's Guide to Multimodal AI

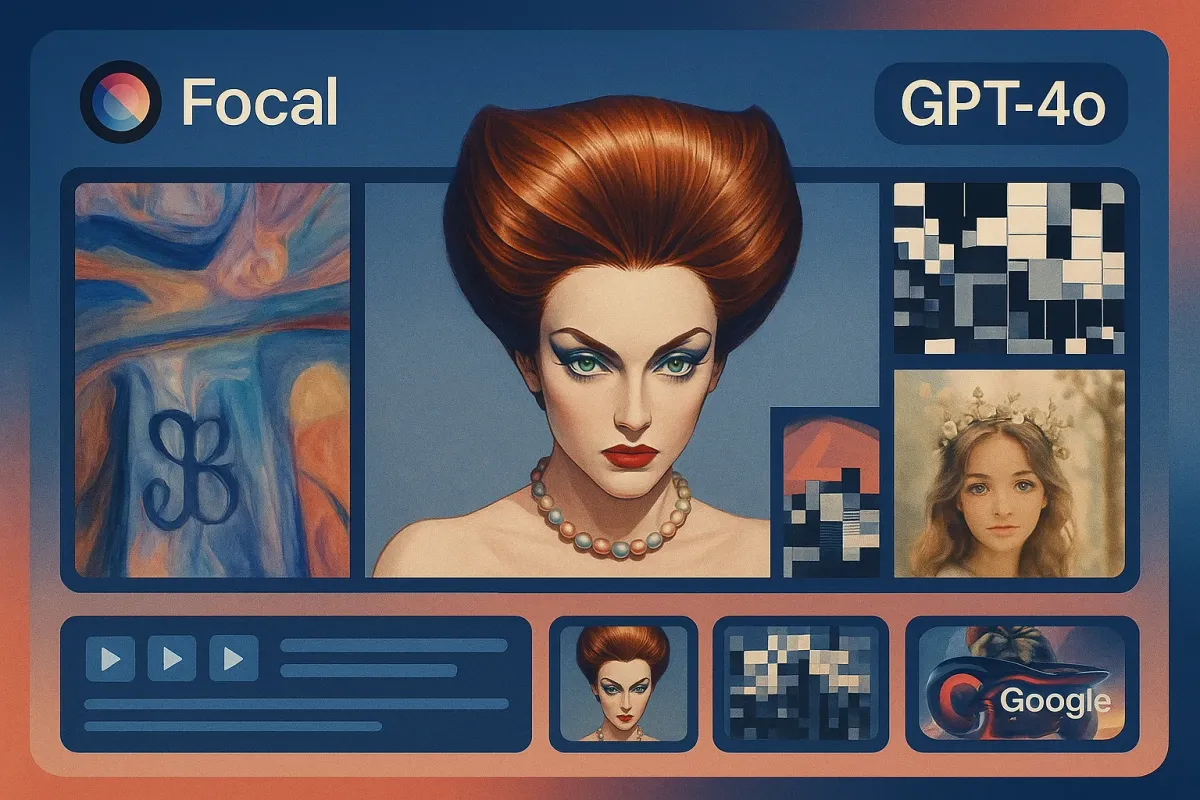

AI tools are evolving fast—but they don’t have to be overwhelming. At Focal, we’re building the future of video storytelling by combining the power of cutting-edge models like GPT-4o with an intuitive, in-browser video editor. No extra tabs. No technical hurdles. Just one tool to write, visualize, and shape your story from start to finish.

If you're new to GPT-4o or wondering how it fits into Focal, this guide is for you.

What is GPT-4o—and Why Should You Care?

GPT-4o (the “o” stands for “omni”) is OpenAI’s multimodal model. That means it doesn’t just understand text: it also handles images and audio, and it responds quickly enough for live, spoken conversation. It's a big step toward making AI feel more natural, responsive, and creative.

In Focal, we’ve integrated GPT-4o where it makes the biggest impact for video creators:

- Scriptwriting that understands visuals

- Image-aware scene suggestions

- Contextual voiceover generation

- Smart revision suggestions based on your storyboard

What You Can Do with GPT-4o in Focal

Here’s how creators are already using GPT-4o inside Focal to level up their process:

✍️ Write Smarter Scripts

Start with a rough idea, or even just a title, and GPT-4o can help you shape it into a compelling narrative. Want your script to match a certain tone, audience, or format? Just ask. Because GPT-4o keeps track of the context you’ve already given it, it can adapt to those needs with less back-and-forth.
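Curious what "just ask" looks like under the hood? Here is a minimal sketch of how a tone, audience, and format request might be packaged as chat-style messages for a model like GPT-4o. It is not Focal's actual implementation: the function name `build_script_messages` and the wording of the prompts are assumptions made up for illustration. Only the `role`/`content` message shape follows the common chat format.

```python
def build_script_messages(idea, tone, audience, fmt):
    """Return a chat-style message list asking for a video script.

    Hypothetical helper for illustration only; not part of Focal.
    """
    # The system message carries the constraints the writer cares about.
    system = (
        "You are a scriptwriting assistant for short videos. "
        f"Write in a {tone} tone for {audience}, formatted as {fmt}."
    )
    # The user message carries the rough idea or title.
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": f"Draft a script from this idea: {idea}"},
    ]


messages = build_script_messages(
    idea="Why bees matter",
    tone="playful",
    audience="middle-school students",
    fmt="a 60-second explainer",
)
```

In Focal you never have to write this yourself. The point is simply that tone, audience, and format travel alongside your idea, so the model sees them all at once.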

🎬 Build Scenes with Context

Focal lets you upload images, sketches, or even previous cuts. GPT-4o can analyze what you give it and suggest new lines of dialogue, transitions, or scene setups based on what it sees—not just what it reads.
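For the technically curious, here is a rough sketch of how an image and a text instruction can be combined into a single request for GPT-4o, using the message shape that OpenAI's Chat Completions API accepts for image input (a `text` part plus an `image_url` part holding a base64 data URL). How Focal wires this up internally is not described here, so treat the helper name `build_image_message` and the placeholder image bytes as assumptions. The sketch only builds the payload and does not send anything over the network.

```python
import base64


def build_image_message(image_bytes, instruction, mime="image/png"):
    """Build one user message that pairs an instruction with an image.

    Hypothetical helper for illustration only; not part of Focal.
    """
    # Encode the raw image bytes so they can travel inside a data URL.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": instruction},
            {
                "type": "image_url",
                "image_url": {"url": f"data:{mime};base64,{encoded}"},
            },
        ],
    }


# Placeholder bytes stand in for a real storyboard frame.
message = build_image_message(
    b"fake-image-bytes",
    "Suggest a line of dialogue for this scene.",
)
payload = {"model": "gpt-4o", "messages": [message]}
```

Because the image and the instruction sit in the same message, the model can base its suggestion on what is in the frame rather than on a text description of it.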

🎤 Generate Voiceovers on the Fly

Thanks to multimodal awareness, GPT-4o doesn't just write narration—it writes voiceovers that match your visual flow. When paired with ElevenLabs' voice model inside Focal, you get high-quality narration that feels like it was custom-written for your footage.

✏️ Iterate Faster

Need to tighten a scene? Shorten a script? Match pacing to mood? GPT-4o can revise based on video context, not just the script. That can mean fewer rounds of edits, smoother production, and a final cut that stays closer to what you had in mind.

Getting Started in Focal

No need to copy/paste between apps or sign up for multiple AI tools. Just log in to Focal, start a new project, and you’ll find GPT-4o features woven directly into your workflow.

To try it:

- Start a new script or video project in Focal

- Click “Generate with AI” in the script editor or storyboard view

- Add text, upload images, or select a scene for revision

- Let GPT-4o do the heavy lifting—from drafting to refining

Final Thoughts

With GPT-4o built directly into Focal, you're not just using AI—you’re collaborating with it. Whether you're crafting explainer videos, documentaries, or social content, GPT-4o helps you think faster, write smarter, and create with confidence.

This is just one of the many state-of-the-art models we’ve integrated into Focal—including LTX-V for video, ElevenLabs for voice, Luma for 3D scenes, and more.

If you’re ready to explore the future of storytelling, you’re already in the right place.


📧 Got questions? Email us at [email protected] or click the Support button in the top right corner of the app (you must be logged in). We actually respond.