AI Video for Accessibility: Automated Sign Language, Audio Descriptions, and Multilingual Dubbing

Modern video accessibility has become a creative advantage. AI-powered automation now enables instant sign language overlays, natural audio descriptions, and multilingual dubbing. What once required entire production teams now happens in real time, allowing creators to reach every audience with inclusion built into every frame.

The New Standard of Inclusive Video Experiences

Accessibility is transforming into a measure of quality. Viewers today expect equal access, not optional subtitles or extra versions. Automated accessibility technologies make this possible with minimal friction.

Key outcomes of inclusive AI video:

- Wider audience reach across hearing, vision, and language differences

- Improved engagement through personalization

- Stronger reputation and social credibility

- Lower production and translation costs

Inclusive media now drives retention and visibility just as much as creativity.

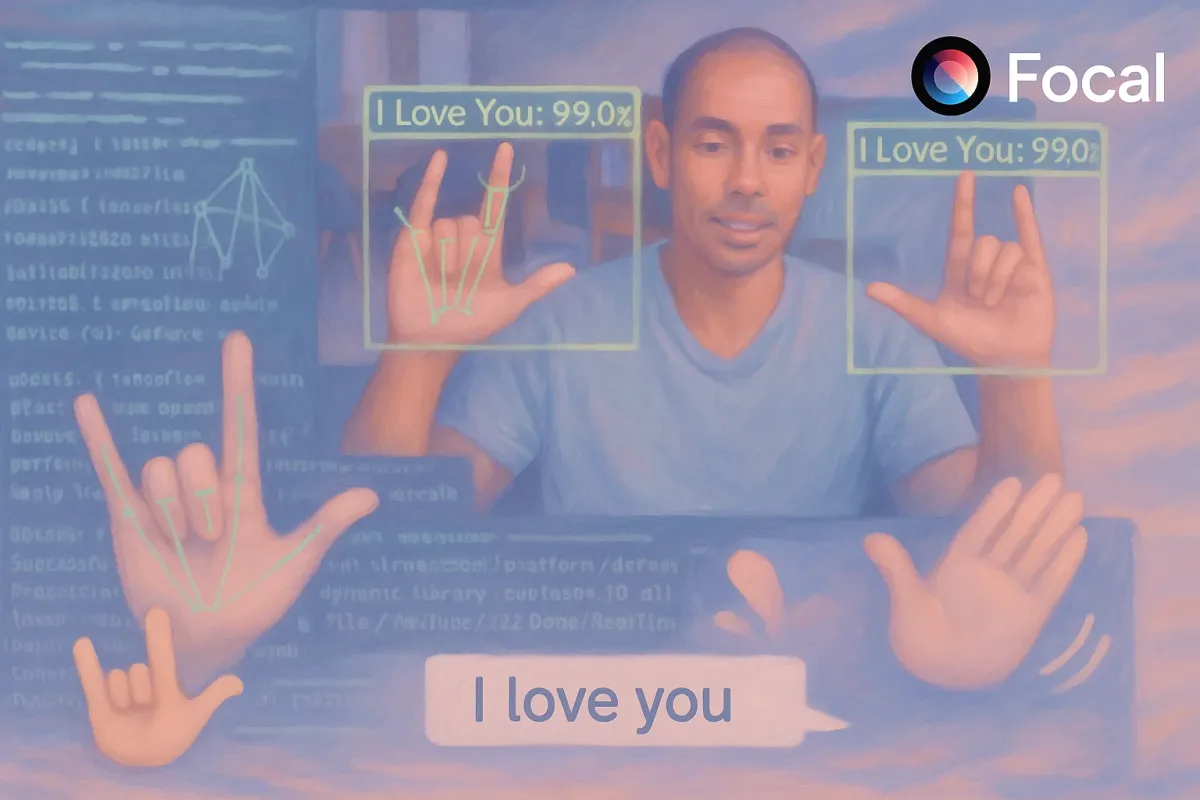

Automated Sign Language Transforms How Viewers Understand Content

AI sign language generation converts speech into synchronized visual gestures using virtual interpreters. The results are fluid and expressive, allowing deaf and hard-of-hearing audiences to fully experience tone and emotion without manual post-editing.

Comparison of sign language workflows

| Feature | Traditional Method | AI Automated Process |
|---|---|---|
| Interpreter requirement | Human on-set or in-studio | Automated generation |
| Editing time | Long manual synchronization | Real-time alignment |
| Scalability | Limited to one or two languages | Multiple sign languages supported |

The outcome is more than translation; it is visual empathy.

Audio Descriptions That Turn Visuals into Narrative

Audio descriptions generated by AI analyze visual content and create natural voice narration describing actions, emotions, and scene transitions. This goes far beyond basic script reading.

Audio description examples:

- Describing facial expressions or silent reactions

- Explaining setting and movement within scenes

- Identifying background actions relevant to context

The goal is to let blind and low-vision audiences experience emotion, suspense, and tone as fully as sighted viewers do.
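For readers curious about the mechanics, here is a minimal sketch of one step a description system might perform: finding pauses in the dialogue where narration can fit without talking over the speakers. It is an illustration only, not how any particular product works. The `Interval` type, the `find_gaps` function, and the two-second minimum gap are all assumptions made for this example.

```python
from dataclasses import dataclass


@dataclass
class Interval:
    start: float  # seconds from the start of the video
    end: float    # seconds from the start of the video


def find_gaps(dialogue: list[Interval], video_end: float,
              min_gap: float = 2.0) -> list[Interval]:
    """Return silent stretches of at least min_gap seconds between dialogue lines."""
    gaps = []
    cursor = 0.0  # end of the latest dialogue seen so far
    for line in sorted(dialogue, key=lambda d: d.start):
        if line.start - cursor >= min_gap:
            gaps.append(Interval(cursor, line.start))
        # max() keeps the cursor correct when dialogue lines overlap
        cursor = max(cursor, line.end)
    # Also consider the stretch after the last line of dialogue
    if video_end - cursor >= min_gap:
        gaps.append(Interval(cursor, video_end))
    return gaps


# Hypothetical timings: two lines of dialogue in a 15-second clip
dialogue = [Interval(1.0, 4.0), Interval(8.0, 10.0)]
gaps = find_gaps(dialogue, video_end=15.0)
```

With these sample timings, the function finds two usable gaps: one from 4 to 8 seconds and one from 10 to 15 seconds. The opening second is skipped because it is shorter than the minimum.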

Multilingual Dubbing That Keeps Emotion Intact

AI-based dubbing allows speech to be translated and reproduced with matching tone and rhythm. Rather than sounding artificial, modern systems preserve emotion and character intent across multiple languages.

Benefits for creators and organizations:

- Consistent emotional delivery across languages

- Rapid localization for education, marketing, or entertainment

- Reduced production cycles without sacrificing quality

This enables a single video to sound authentic in every region, removing the old divide between original and translated versions.
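Part of keeping rhythm intact is making each translated line fit the time slot of the original. As a rough sketch, assuming you already know how long the original and dubbed clips run, the playback rate needed to fit the slot can be computed and clamped so the voice never sounds rushed or dragged. The `playback_rate` function and its limits of 0.85 and 1.2 are illustrative assumptions, not values taken from any specific tool.

```python
def playback_rate(original_duration: float, dubbed_duration: float,
                  min_rate: float = 0.85, max_rate: float = 1.2) -> float:
    """Rate at which to play the dubbed clip so it fits the original slot.

    A rate above 1.0 speeds the dubbed audio up; below 1.0 slows it down.
    The result is clamped to [min_rate, max_rate] (assumed limits).
    """
    if original_duration <= 0 or dubbed_duration <= 0:
        raise ValueError("durations must be positive")
    rate = dubbed_duration / original_duration
    return min(max(rate, min_rate), max_rate)


# Hypothetical example: a 4.0 s original line whose translation runs 4.5 s
rate = playback_rate(original_duration=4.0, dubbed_duration=4.5)
```

When the required rate falls outside the limits, a real workflow would more likely shorten or rephrase the translation than stretch the audio further.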

Accessibility Features That Enhance SEO and Viewer Retention

Accessible videos are not only inclusive but also search-optimized. Automated transcripts, captions, and the text behind audio descriptions give search engines more to index, which increases content visibility and relevance.

SEO performance advantages:

- Rich metadata and transcript-based keyword indexing

- Increased session duration and reduced bounce rates

- Enhanced discoverability across video platforms and search engines

In accessibility, every feature also doubles as a performance boost.
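To make the transcript side concrete: timed captions are commonly published as WebVTT files, a plain-text format that web players load through the HTML `<track>` element. The sketch below turns timed segments into WebVTT text. The `Cue` class and the function names are made up for this example, and the segments would come from whatever speech recognition step you use.

```python
from dataclasses import dataclass


@dataclass
class Cue:
    start: float  # seconds from the start of the video
    end: float    # seconds from the start of the video
    text: str


def format_timestamp(seconds: float) -> str:
    """Format seconds as a WebVTT timestamp (HH:MM:SS.mmm)."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}.{ms:03d}"


def to_webvtt(cues: list[Cue]) -> str:
    """Render cues as the text of a WebVTT file."""
    lines = ["WEBVTT", ""]  # required header, then a blank line
    for cue in cues:
        lines.append(f"{format_timestamp(cue.start)} --> {format_timestamp(cue.end)}")
        lines.append(cue.text)
        lines.append("")  # a blank line ends each cue
    return "\n".join(lines)


# Hypothetical sample segment
vtt_text = to_webvtt([Cue(0.0, 2.5, "Welcome to the course.")])
```

Saving `vtt_text` to a `.vtt` file next to the video gives players a caption track, and the same text can be reused as an on-page transcript.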

How Different Sectors Benefit from Automated Video Accessibility

| Sector | Key Use Case | Primary Benefit |
|---|---|---|
| Education | Online lectures and learning videos | Equal participation for students with disabilities |
| Corporate | Training and onboarding content | Localization for global workforces |
| Media and Entertainment | Streaming and social platforms | Access for diverse and multilingual audiences |
| Public Services | Awareness and information videos | Compliance and inclusivity for all citizens |

Automation makes accessibility scalable, measurable, and beneficial to every industry.

Bringing It All Together with Smarter Accessibility in Your Videos

Making videos accessible is no longer a chore. It’s just part of creating something that feels real and inclusive. When your content automatically includes sign language, natural audio descriptions, and smooth multilingual dubbing, you’re not just reaching more people; you’re showing that everyone deserves a seat at the table. It’s that simple shift from “extra feature” to “essential experience” that makes your videos stand out.

Inside Focal, these tools work together so naturally that accessibility feels built in, not added on. You can choose a voice model, drop in subtitles, or have your video translated in minutes. Everything aligns so your message sounds clear, looks beautiful, and reaches the people who actually need it most.

Focal supports multilingual and audio-first creation. Build inclusive videos faster, whether you’re adding voice or visual clarity.

📧 Got questions? Email us at [email protected] or click the Support button in the top right corner of the app (you must be logged in). We actually respond.

Frequently Asked Questions

What is AI video accessibility and how does it work?

AI video accessibility means using artificial intelligence to make videos easier to understand for everyone, including people with hearing or vision disabilities. It works by automatically adding features like sign language overlays, subtitles, audio descriptions, and voice dubbing. These AI systems analyze the video content, identify dialogue and visuals, then generate accessible layers without needing manual editing.

How can automated sign language improve video accessibility?

Automated sign language improves video accessibility by translating spoken dialogue into visual gestures in real time. This gives deaf and hard-of-hearing viewers a natural and expressive way to follow the content. Instead of hiring interpreters for every video, AI systems can generate accurate sign language versions instantly, helping creators reach more people while keeping production costs low.

What are audio descriptions in videos and why are they important?

Audio descriptions are spoken narrations that describe what is happening on screen for viewers who are blind or have low vision. They explain actions, settings, and visual cues that aren’t mentioned in the dialogue. Including audio descriptions in videos makes content inclusive, ensuring that everyone can follow the story and feel emotionally connected to it.

How does multilingual dubbing help global audiences understand videos?

Multilingual dubbing helps audiences understand videos in their own language by translating and voicing content using AI voice models. The tone and pacing stay natural, so the emotional meaning isn’t lost. This feature is widely used by creators, brands, and educators who want to reach international audiences without recording multiple versions manually.

Can AI tools like Focal add subtitles and voiceovers automatically?

Yes, AI tools like Focal can automatically create subtitles and voiceovers for your videos. These tools use speech recognition and voice generation models to produce accurate captions, voice tracks in multiple languages, and synced timing. This automation saves time and ensures that accessibility and localization are integrated directly into the creative process.

Why is video accessibility important for SEO and online visibility?

Video accessibility helps SEO because features like captions, transcripts, and multilingual audio make your content more searchable. Search engines can index this extra text and metadata, which improves keyword coverage and discoverability. Accessible videos can also increase viewer engagement and watch time, which helps visibility on YouTube and in Google's video search results.

How do AI tools like Focal make accessibility easy for content creators?

AI tools like Focal simplify accessibility by combining features such as sign language generation, dubbing, and subtitle automation in one place. Creators can upload a video, select the accessibility options they need, and receive output ready for publishing. It removes the technical barriers that used to make accessibility expensive or complicated, helping every creator make inclusive content faster.